Estimating Field Weather and Soil Conditions

I love applying one of my favorite expressions to meteorology: “Measure with a micrometer, mark it with chalk and cut it with an axe.”

We can quickly get wrapped up in the allure of precision, spending inordinate amounts of time ensuring we can measure or compute an important quantity in great detail, only to pass it into an algorithm that is subject to other sources of error. If you have a tree to cut down and an axe is the best tool you have, your time would be better spent sharpening the axe and then eyeballing the place to strike.

In a 2016 Brookings Institution blog post, Wolfgang Fengler, a lead economist for the World Bank, described how letting perfect be the enemy of good is a self-inflicted barrier to progress in the data revolution:

“…perfection remains important, especially when it comes to matters of life and death such as flying airplanes, constructing houses, or conducting heart surgery, as these areas require as much attention to detail as possible. At the same time, in many realms of life and policymaking we fall into a perfection trap. We often generate obsolete knowledge by attempting to explain things perfectly, when effective problem solving would have been better served by real-time estimates. We strive for exactitude when rough results, more often than not, are good enough.”


We have to watch for this sort of thinking creeping into precision agriculture, where we already have an overwhelming sea of real-time and archived data. Those data can take us to the next level of operational intelligence, enabling automation that leads to better productivity, more consistent product, improved efficiency, and larger margins. Often, as I discuss how to apply readily available, high-quality atmospheric, soil, and plant canopy information to various problems in precision agriculture, the conversation quickly gets sidetracked and helpful solutions are bypassed because of questions about the need for more sensors or higher-resolution model information. In many cases, even if such information were available, it would provide minimal benefit or could even have a negative impact.

The fact is that all methods of ascertaining environmental conditions, from the top of the atmosphere through the biosphere and into the soil, are imperfect and subject to many sources of error, whether the data come from an in situ probe or a computer simulation. We should treat data from any of these methods as estimates of actual conditions, even when they are obtained directly from a sensor. Because of these errors, some known and some unknown, there is a point of diminishing returns beyond which the cost of increasing resolution, accuracy, and precision outweighs the benefit; in some cases, the increase is not even scientifically justified. Where that point lies depends on many factors, including the problem being solved, the inputs it requires, and its sensitivity to errors in those inputs, which in turn can vary with location, period of interest, and the method used to estimate them. The old axiom applies: just because you can do something doesn’t mean you should.

For example, if I know an egg needs to be cooked at 212°F for five minutes to reach the right hard-boiled consistency, it would be a waste of time and money for me to instrument my pan with thermistors. We already understand the physics well enough to know that water boils at 212°F (at sea level) and stays at that temperature until it has boiled away. So I can simply raise the heat until I see the water boiling, and that will give me the desired result. On the other hand, if I don’t want to get food poisoning from my Thanksgiving Day turkey, I need to be sure its internal temperature reaches 165°F, so I prefer a bit more rigor: using a meat thermometer to measure particular points in the breast or thigh as an indicator. Some people are satisfied with shaking the leg or relying on the built-in pop-up doneness indicator.

My point is that there is no one-size-fits-all “best” method or source of field weather and soil conditions. Finding the best one for any particular decision tool or service requires significant technical interchange and mutual trust between agricultural and meteorological experts to strike the right balance among what’s possible, the cost of implementation, and the risk incurred, all weighed against the value of the decisions that will be made using these data.
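To put rough numbers on that balance, here is a minimal sketch of an error budget. It assumes the error sources are independent and uncorrelated, so they combine as a root-sum-square, and it expresses every component in the same units for simplicity. The component names and values are hypothetical, not taken from any real system.

```python
import math

def combined_error(*component_errors: float) -> float:
    """Root-sum-square total of independent, uncorrelated errors in the same units."""
    return math.sqrt(sum(e * e for e in component_errors))

# Hypothetical 1-sigma errors (all in °C for simplicity) behind a field decision:
# the instrument itself, how representative its site is of the whole field,
# and the error of the downstream model that consumes the value.
sensor, representativeness, decision_model = 0.5, 1.5, 2.0

baseline = combined_error(sensor, representativeness, decision_model)
micrometer = combined_error(sensor / 10, representativeness, decision_model)  # 10x better sensor
sharper_axe = combined_error(sensor, representativeness, decision_model / 2)  # halve largest error

print(f"baseline {baseline:.2f}, 10x sensor {micrometer:.2f}, half model error {sharper_axe:.2f}")
```

Under these assumptions, a sensor ten times more precise barely moves the total (about 2.55 to 2.50), while halving the largest error source cuts it to about 1.87. Once other errors dominate, extra precision in one input buys very little.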

One of the big issues we have traditionally had in meteorology, and one I am also seeing as weather and soil information is integrated into precision agriculture, is a polarization between those who place most of their trust in sensors and those who place more trust in models. The reality is that it shouldn’t be “either/or” but “both/and.” Sensors themselves contain models: they generally don’t directly measure the quantity we need to know, so formulas (i.e., models) are needed to convert the engineering units they do measure (e.g., a voltage level) into a physical quantity (e.g., temperature). Consider an agricultural weather station’s report of reference evapotranspiration (ET). Many steps in the process of arriving at that ET value can introduce errors:

- Is the station of good quality, well-calibrated, and properly installed in a location that is representative of conditions over the entire area of the field?

- Has it been checked recently to make sure the rain gauge isn’t clogged, the solar radiation sensor is clean, the anemometer bearings aren’t failing, and the temperature and humidity sensors are properly aspirated?

- How much error is introduced by the empirical formulas that convert the sensor outputs (voltage, resistance, counts, etc.) into temperature, humidity, solar radiation, and wind speed? Each parameter has some error, and a good station vendor publishes the expected error for each one.

- What formulation does the station use to compute the ET from the weather parameters, and how sensitive is it to errors in those parameters?

- How applicable is reference ET to the particular crop variety on the field?

- How accurate is the model that uses ET to provide a particular decision, such as an irrigation recommendation?
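The error chain in the list above can be sketched end to end: a sensor model converts a raw thermistor resistance into temperature, a reference-ET formula turns the weather values into ET, and a small calibration error in the first step shows up in the final number. This is a minimal sketch under several assumptions: the Steinhart-Hart thermistor equation with illustrative coefficients for a generic 10 kΩ NTC thermistor, the FAO-56 Penman-Monteith daily equation (one common formulation; a station vendor may use another, such as the ASCE standardized equation), net radiation supplied directly rather than computed from solar radiation, and hypothetical station readings.

```python
import math

# Step 1: sensor model (resistance -> temperature).
# Illustrative Steinhart-Hart coefficients of the kind published for a generic
# 10 kOhm NTC thermistor (an assumption; real probes use the vendor's calibration).
SH_A, SH_B, SH_C = 1.009249522e-3, 2.378405444e-4, 2.019202697e-7

def thermistor_temp_c(resistance_ohms: float) -> float:
    """Convert thermistor resistance (ohms) to air temperature (°C)."""
    ln_r = math.log(resistance_ohms)
    return 1.0 / (SH_A + SH_B * ln_r + SH_C * ln_r ** 3) - 273.15

# Step 2: ET model (weather -> reference ET).
def sat_vapor_pressure(t_c: float) -> float:
    """Saturation vapour pressure (kPa) at t_c (°C), as used in FAO-56."""
    return 0.6108 * math.exp(17.27 * t_c / (t_c + 237.3))

def reference_et(t_max, t_min, rh_mean, u2, rn, elevation_m=0.0, g=0.0):
    """Daily FAO-56 Penman-Monteith grass reference ET (mm/day).

    t_max/t_min in °C, rh_mean in %, u2 = wind speed at 2 m (m/s),
    rn = net radiation and g = soil heat flux (MJ m-2 day-1), taken as given here.
    """
    t_mean = (t_max + t_min) / 2
    es = (sat_vapor_pressure(t_max) + sat_vapor_pressure(t_min)) / 2
    ea = rh_mean / 100 * es  # actual vapour pressure from mean RH, the simplest FAO-56 option
    delta = 4098 * sat_vapor_pressure(t_mean) / (t_mean + 237.3) ** 2
    pressure = 101.3 * ((293 - 0.0065 * elevation_m) / 293) ** 5.26  # kPa
    gamma = 0.000665 * pressure  # psychrometric constant, kPa/°C
    numerator = 0.408 * delta * (rn - g) + gamma * 900 / (t_mean + 273) * u2 * (es - ea)
    return numerator / (delta + gamma * (1 + 0.34 * u2))

# Step 3: propagate a calibration error through the chain.
# Hypothetical daily max/min thermistor resistances (about 34 °C and 18 °C)
# and other hypothetical station values.
r_at_tmax, r_at_tmin = 7_000.0, 13_000.0
inputs = dict(rh_mean=50.0, u2=2.0, rn=15.0, elevation_m=300.0)

def eto_from_resistances(scale: float) -> float:
    """ETo when every resistance reading is off by the multiplicative factor `scale`."""
    return reference_et(thermistor_temp_c(r_at_tmax * scale),
                        thermistor_temp_c(r_at_tmin * scale), **inputs)

baseline = eto_from_resistances(1.00)
biased = eto_from_resistances(0.98)  # resistance chain reads 2% low, so temperatures read warm
print(f"ETo {baseline:.2f} mm/day; with a 2% resistance error {biased:.2f} mm/day")
```

Perturbing other inputs the same way (a dirty pyranometer through `rn`, a failing anemometer through `u2`) is a quick way to see which inputs a given decision is most sensitive to, and therefore where better instrumentation would actually pay off.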

As you can see, a “measurement” has many potential sources of uncertainty, and the value received may not even be a direct measurement at all. Furthermore, a sensor can provide a consistent long-term history of conditions only for as long as it, or a sensor of similar quality, has been installed in the same place. And no sensor provides a quality, useful forecast. Finally, despite new technologies that are allowing IoT-connected weather sensors to proliferate, coverage is still very sparse relative to the areas needing information. For example, a 2017 essay in the Bulletin of the American Meteorological Society noted that all of the known, operational, publicly shared rain gauges could fit on half of a football field. To address all of these needs, we have to combine sensor information with appropriate models.

Numerical weather models are an accepted scientific method of assimilating all kinds of measurements to provide comprehensive, consistent spatial and temporal coverage that is relevant to any location, even where there are no nearby weather stations. These models use well-understood physics to account for the chain of processes that begins with the sun’s energy differentially heating a rotating, spheroidal Earth with variable topography and surface characteristics, generating realistic three-dimensional estimates of temperature, humidity, clouds, radiation, precipitation, soil conditions, etc., through a very complex interaction of interdependent algorithms. Although they too are subject to error, they produce consistent results and allow us to realistically fill the gaps in space and time with good estimates of conditions. When combined with quality sensors that measure the most important quantities in the right places with the necessary accuracy, these models can provide even better results. Likewise, their consistency and physics-based algorithms make them useful for checking deployed sensors for possible maintenance issues.
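Operational models use far more elaborate, multivariate data assimilation, but the core idea of combining a model estimate with a sensor reading, each weighted by its error variance, fits in a few lines. This is a minimal one-dimensional sketch (a scalar optimal-interpolation or Kalman update), assuming unbiased, uncorrelated errors with known variances; all values are hypothetical.

```python
from __future__ import annotations

def blend(background: float, background_var: float,
          observation: float, observation_var: float) -> tuple[float, float]:
    """Combine a model (background) value with one observation.

    Returns (analysis, analysis_variance), assuming unbiased, uncorrelated errors.
    """
    gain = background_var / (background_var + observation_var)  # weight on the observation
    analysis = background + gain * (observation - background)
    analysis_var = (1.0 - gain) * background_var
    return analysis, analysis_var

# Hypothetical: the model estimates 21.0 °C (error variance 1.0 °C²);
# a nearby, well-sited sensor reads 22.0 °C (error variance 0.25 °C²).
value, variance = blend(21.0, 1.0, 22.0, 0.25)
print(f"analysis {value:.2f} °C, error variance {variance:.2f} °C²")
```

The analysis error variance (0.2 here) is smaller than either input's, which is the sense in which the combination beats either source alone. The unbiased-error assumption matters: a sensor with an undetected bias would pull the analysis toward a wrong value with unwarranted confidence.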

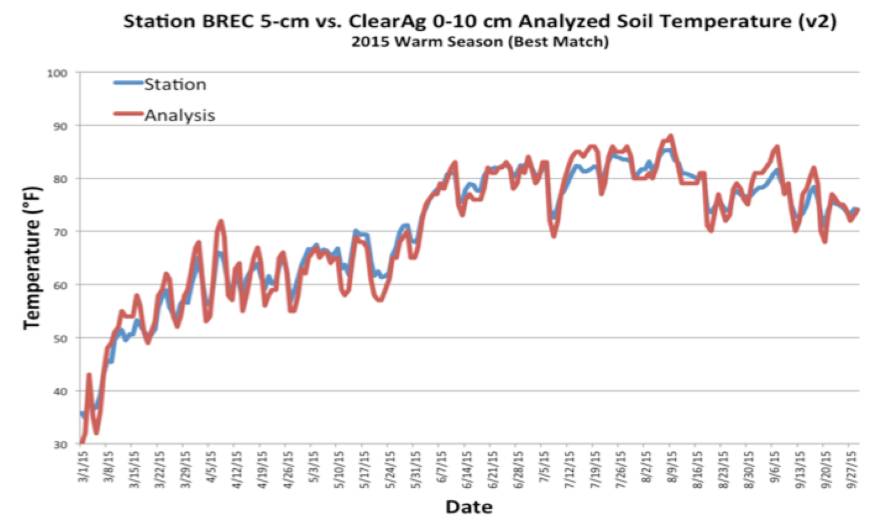

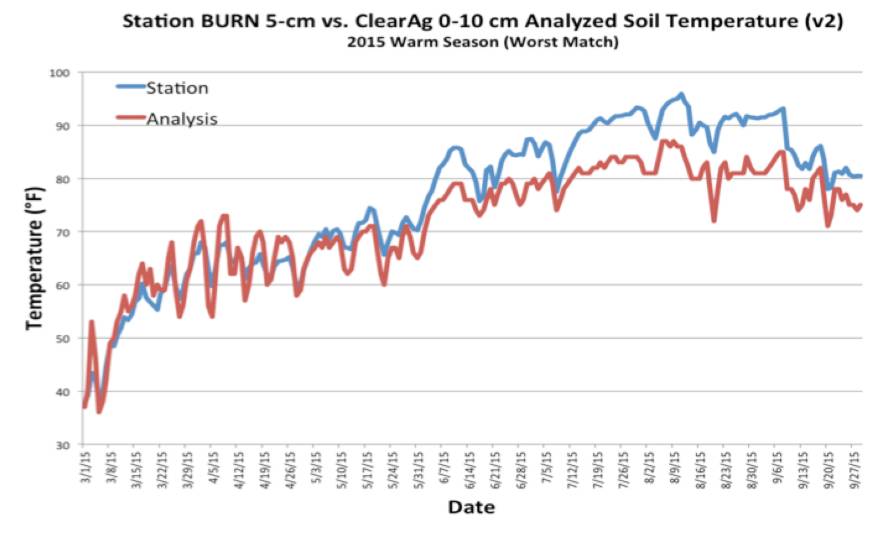

As a real-world example, the two figures above compare daily average modeled soil temperature with readings from Oklahoma Mesonet station soil temperature sensors over a whole season in 2015. The model did not have access to these sensors. In fact, the model doesn’t use ANY soil temperature or moisture sensors. Instead, it takes weather information extracted from gridded numerical weather analysis data as “synthetic” weather station input to equations that encode our understanding of soil physics, estimating how the soil responds to the weather.
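How the weather values are pulled from the gridded analysis for a “synthetic” station is not specified here. One common, simple choice is bilinear interpolation from the four surrounding grid points to the field’s latitude and longitude. The sketch below assumes that approach, uses plain nested lists rather than any particular grid-file library, and works on a hypothetical grid.

```python
import math

def bilinear(grid, lat0, lon0, dlat, dlon, lat, lon):
    """Bilinearly interpolate a regular latitude/longitude grid to one point.

    grid[i][j] is the value at latitude lat0 + i*dlat and longitude lon0 + j*dlon.
    """
    fi = (lat - lat0) / dlat          # fractional row index
    fj = (lon - lon0) / dlon          # fractional column index
    i, j = math.floor(fi), math.floor(fj)
    if not (0 <= i < len(grid) - 1 and 0 <= j < len(grid[0]) - 1):
        raise ValueError("point is outside the interpolable part of the grid")
    wi, wj = fi - i, fj - j           # weights toward the next row and column
    return ((1 - wi) * (1 - wj) * grid[i][j]
            + (1 - wi) * wj * grid[i][j + 1]
            + wi * (1 - wj) * grid[i + 1][j]
            + wi * wj * grid[i + 1][j + 1])

# Hypothetical 3 x 3 grid of 2 m air temperature (°C) with 0.25° spacing,
# southwest corner at 36.0 N, 98.0 W (west longitudes are negative).
temps = [[20.0 + i + 2 * j for j in range(3)] for i in range(3)]
field_temp = bilinear(temps, 36.0, -98.0, 0.25, 0.25, 36.1, -97.9)
print(f"synthetic station 2 m temperature: {field_temp:.2f} °C")
```

Because bilinear weights reproduce any field that varies linearly between grid points, this toy grid gives 21.2 °C at the chosen point. Real analyses may call for other schemes, for example near coastlines or in steep terrain.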

For the first location (BREC), the model and the sensor agree closely in both the value and the daily variation of soil temperature. At the second location (BURN), agreement is good early in the season, but in May the two lines start to diverge, with the sensor reporting warmer temperatures than the model even though the daily trends still match. This implies a bias in either the model or the sensor. Since nothing changed in the soil model code or the sources of weather data, the most likely culprit was a problem with the sensor, such as a drift in its calibration or a change in its installation. Indeed, when I showed this to a member of the Oklahoma Mesonet staff, they confirmed that this station had experienced heavy rainfall and flooding in the spring that washed away some of the topsoil, leaving the sensor a bit shallower than its original 5 cm depth and producing a warm bias. Without the model data for comparison, a user of data from this station might never have detected the problem, because the data still looked realistic and passed all automated checks.
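The kind of comparison that exposed the BURN problem can be automated. This is a minimal sketch, assuming daily model and sensor values already aligned by date: it flags a station when its day-to-day changes still track the model but the mean offset over a trailing window exceeds a threshold. The window, threshold, and trend-agreement rule are hypothetical choices, not the procedure used by the author or the Oklahoma Mesonet, and the series are synthetic.

```python
import math
from statistics import mean

def rolling_bias(sensor, model, window=14):
    """Mean sensor-minus-model difference over each trailing window of daily values."""
    diffs = [s - m for s, m in zip(sensor, model)]
    return [mean(diffs[k - window + 1:k + 1]) for k in range(window - 1, len(diffs))]

def trend_agreement(sensor, model):
    """Fraction of day-to-day changes where sensor and model move in the same direction."""
    same = sum((s1 - s0) * (m1 - m0) > 0
               for s0, s1, m0, m1 in zip(sensor, sensor[1:], model, model[1:]))
    return same / (len(sensor) - 1)

def flag_drift(sensor, model, window=14, threshold=1.0, min_agreement=0.8):
    """Flag a likely sensor problem: daily trends still agree, but the recent offset is large."""
    return (trend_agreement(sensor, model) >= min_agreement
            and abs(rolling_bias(sensor, model, window)[-1]) > threshold)

# Synthetic season (not Mesonet data): a warming model series with day-to-day swings,
# and a "sensor" that matches it until day 40, then drifts warm by 0.1 °C per day.
days = range(60)
model = [12 + 0.15 * d + 2 * math.sin(d) for d in days]
sensor = [m + 0.1 * max(0, d - 40) for d, m in zip(days, model)]

print("early season flagged:", flag_drift(sensor[:40], model[:40]))
print("full season flagged: ", flag_drift(sensor, model))
```

Requiring the trends to agree is what separates a slowly biased sensor, like the shallower probe described above, from one that has failed outright or become noisy.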

If we want to fully leverage field weather and soil information for operational decision-making in precision agriculture, we must modernize our thinking, too, and break out of our own preconceptions about sensors versus models, resolution, accuracy, and all of the other issues that distract us. Let’s engage multi-disciplinary experts to understand specific, high-value problems, what datasets exist now, and how to use them for better decision-making in cost-effective ways, even as specialists continue to advance the various methods of measuring and modeling for future enhancements. We have some nice, sharp axes available now, and our micrometers really don’t fit the trees!